Who framed George Lakoff?

Not long ago, a good friend and I had an e-mail thread about framing. He took issue with one of my definitions of framing as “genuinely communicating your beliefs.”

In his words:

“Framing” is when you successfully communicate your beliefs in the language that appeals to your intended audience. For example: tailoring your language and metaphors to veterans (“honor” and “duty”) or to union members (“solidarity” or “standing shoulder to shoulder”) or to soccer moms (“our children are our future!”). Framing means using the reference points which already exist within your audience’s mind to explain how your political issue fits into their preconceived notions.

His view is that framing is using language to explain something to someone in a way that fits how they already think. In this view, framing is simply a matter of using the right language: we just need to use the right words, we just need to develop the right message.

Here’s Bill Maher expressing a similar view:

Democrats need to stop despairing about the gloomy midterm predictions, and realize there’s actually a glimmer of hope, and it has to do with suicide. Let me finish. For decades now, liberals pushed the issue of assisted suicide, and it got nowhere. Then, they started to call it “aid in dying”, and its approval shot up 20 points and it’s now legal in 5 states. That’s the power of language.

While I believe this gets part of it, I’ve come to believe that framing is about more than just language.

George Lakoff. Photo CC2.0 courtesy of Pop!Tech.

Recently, George Lakoff spoke about this misconception:

So people never got that idea. They thought I was talking about language, about messaging. They thought that there were magic words, that if I gave the right words, immediately everybody would get it and be persuaded. They didn’t understand how any of this works. And I, coming into this, didn’t understand what the problem was. It took me a while to figure it out.

What is framing then?

The mind doesn’t work the way we think it does

To help people understand framing and the issue Lakoff points out, I like to ask people: how do we think about how we think?

Throughout history, people have described how our own minds work in terms of the most complex technology of their time. Because we have to describe our minds without knowing exactly how they work, we choose technologies we’ve developed as metaphors and models for the mind.

For example, one of the earliest ways of thinking about the mind was as a hydraulic system, based on the development of aqueducts in Ancient Greece (5th century BC).

The Greek physician Hippocrates, for example, believed there were four basic humors: black bile, yellow bile, phlegm, and blood. When the humors were out of balance, it was believed, people would get sick. The humors were also linked to personality types and moods: sanguine, choleric, melancholic, and phlegmatic (apathetic).

“Melancholy” comes from the Greek melaina chole, which literally means “black bile.”

In the 2nd century AD, the Greek physician Galen, working in Rome, evolved this model into a theory of animal spirits. In Galen’s model, the brain communicated with the body using the fluid known as “animal spirits.”

The liver produced natural spirits that the heart turned into vital spirits, which were then sent on to the brain through the carotid arteries. The brain turned these vital spirits into animal spirits, which were thought to be stored in the brain until they were needed to move the muscles or to carry sensory impressions.

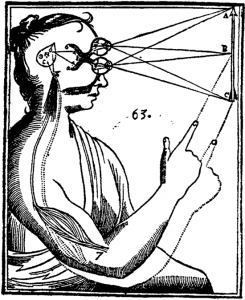

In the 17th century, Descartes advocated a mechanical view of the universe and living organisms. Descartes attributed many of our experiences to the function of the body’s organs as mechanical components:

the reception of light, sounds, odors, tastes, warmth, and other like qualities into the exterior organs of sensation; the impression of the corresponding ideas upon a common sensorium and on the imagination; the retention or imprint of these ideas in the Memory; the internal movements of the Appetites and Passions; and finally, the external motions of all the members of the body … I wish that you would consider all of these as following altogether naturally in this Machine from the disposition of its organs alone, neither more nor less than do the movements of a clock or other automaton from that of its counterweight and wheels

While Descartes believed the mind was immaterial and the body mechanical (dualism), many mechanical metaphors also arose for the mind. Clocks were likely one of the first.

You can still hear this thinking in metaphors we use today like “you can see the wheels turning” or “she has a mind like a clock” or “like clockwork.” Though people never believed actual gears existed in our heads, they believed that some series of actions was taking place, even if we were unable to describe exactly how our mind machinery worked.

John Locke visualized the mind as a tabula rasa, or blank piece of paper, upon which experience left an imprint. Contrary to many before him, he believed we weren’t born with innate ideas but rather that everything we know was shaped by our experience.

You can hear the influence of Gutenberg’s printing press and of printed books, which were widespread throughout Europe by the 17th century, in Locke’s thinking about memory:

The other way of retention is, the power to revive again in our minds those ideas which, after imprinting, have disappeared, or have been as it were laid aside out of sight. And thus we do, when we conceive heat or light, yellow or sweet,—the object being removed. This is memory, which is as it were the storehouse of our ideas.

Ideas imprint on memory like type or script on a blank sheet of paper.

It’s fascinating how quaint and antiquated some of these ideas seem, yet how much of their metaphorical reasoning survives in our day-to-day conversations and thoughts. We still understand what people mean when someone is described as melancholic or sanguine, for example.

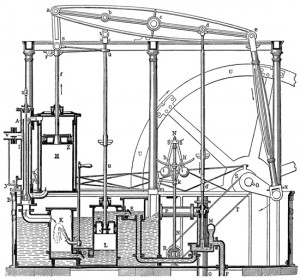

Not surprisingly, then, when James Watt’s improvements made the steam engine practical in the late 18th century, we incorporated it into our thinking about how the human mind works.

Freud, for example, distinguished between the conscious and the unconscious and characterized the human mind as a struggle between the two. Desires, some of which society might see as unacceptable, could be suppressed or repressed. But, like steam in a steam engine, psychological pressure that was held down continued to build until it found an outlet. Freud believed that these internal pressures eventually released themselves in a variety of ways, leading to art, war, passion, and so on.

Blaise Pascal constructed one of the first mechanical calculators, capable of addition and subtraction, in the 17th century. In the 1800s, Charles Babbage first designed the difference engine, a machine for calculating the values of polynomial functions, and then a more general computing machine called the analytical engine. Drawing inspiration from the Jacquard loom, the analytical engine used punched cards as inputs for its mechanical calculations.

As electricity was harnessed and the telegraph was invented, communication devices became metaphors for how we think.

We create new inventions, and those inventions in turn influence both how we think and what we invent next.

The computer

Today, however, by far the most widespread metaphor for the brain is the computer.

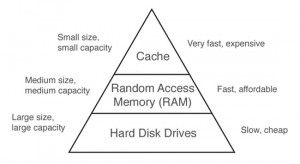

Computer memory is made up of addressable locations, each holding binary information (1s and 0s) that can be read and typically overwritten with new data as needed. We named memory chips after the term we use to describe our own recall of information.

When we think of how our own memory works, we often picture the brain accessing a specific memory area where it stores and retrieves information. In this picture, short-term memory is much like a computer’s cache or RAM, and long-term memory is much like a disk drive.

Similarly, we often think of our brains as having a processing unit. That is, if we feed specific data to our mind, and process it appropriately, the right answer should appear. For example, if we understand the operation called multiplication and are given two different numbers (say 7 and 6) we can return a correct result, 42. Our brains in this sense are viewed as computing machines which, if given a specific input, will produce a specific output.

Computers produce results by sending data from memory to the central processing unit, which performs the computation and sends the results to an output device or back to memory.
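To make the “mind as computer” picture concrete, here is a minimal Python sketch of it: memory is a list of numbered (addressed) cells, and a separate processing step fetches values by address, computes, and writes the result back. The names `memory` and `cpu_multiply` are hypothetical, and real hardware is far more complicated; the point is only the strict split between storage and processing.

```python
# A toy model of the "mind as computer" metaphor: memory is a list of
# addressed cells, and a separate "CPU" function does all the work.

memory = [0] * 8   # eight addressable memory cells
memory[0] = 7      # store the inputs at known addresses
memory[1] = 6

def cpu_multiply(mem, addr_a, addr_b, addr_out):
    """Fetch two values by address, compute, and write the result back."""
    a = mem[addr_a]          # fetch from memory into the "processor"
    b = mem[addr_b]
    result = a * b           # computation happens only here
    mem[addr_out] = result   # store the result at another address
    return result

cpu_multiply(memory, 0, 1, 2)
print(memory[2])  # prints 42
```

Given the same address, this memory returns the same value every time, which is exactly the property the metaphor leads us to expect from our own minds.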

Most people know this computer metaphor so well that if you ask them how they think the mind works, they will describe a computer.

Much of our modern-day language about the mind assumes the mind-as-computer metaphor. When we say someone’s mind is like a computer, for example, we typically mean it as a compliment: that they have immediate access to large stores of information and can process data quickly and accurately.

Reframing the mind as an associative network

Recent research into the mind and discoveries in the fields of biology and artificial neural networks suggest that the metaphor of the mind as a computer is just as flawed as the metaphor of the mind as a steam engine.

From medical research, we know our brain consists of billions of biological neurons. How do these neurons work together to produce thought and function as a single unit, the human brain?

The field of artificial neural networks offers some insights into how these billions of neurons turn input into decisions. While the field is still relatively young, it’s important (and fairly easy) to understand its model metaphorically because of the implications for politics.

The illustration below shows a simple artificial neural network, a backpropagation network, consisting of an input layer (sensors), any number of hidden layers (where the “thinking” occurs through the weighted connections Wij and Wjk), and an output layer (representing the decision).

Artificial neural networks (ANNs) like the one above use a learning rule that modifies the connection weights according to each input and the desired response to that input.

During this “learning” or training process, information is stored in the connection weights, which allows the network to respond appropriately to a given set of inputs.
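A learning rule is easiest to see in a single neuron. The minimal sketch below uses the classic perceptron rule, a simpler relative of the backpropagation rule used in multi-layer networks like the one illustrated. The task (logical AND) and all names are hypothetical choices for illustration; integer weights and a learning rate of 1 keep the arithmetic exact.

```python
# A single artificial neuron trained with the perceptron learning rule.
# Hypothetical task: output 1 only when both inputs are on (logical AND).

training_data = [
    ((0, 0), 0),
    ((0, 1), 0),
    ((1, 0), 0),
    ((1, 1), 1),
]

weights = [0, 0]   # connection weights start with no "knowledge"
bias = 0
learning_rate = 1  # integer steps keep the arithmetic exact

def predict(inputs):
    """Fire (return 1) if the weighted sum of the inputs exceeds zero."""
    total = bias + sum(w * x for w, x in zip(weights, inputs))
    return 1 if total > 0 else 0

for epoch in range(20):  # repeated exposure to the same examples
    for inputs, target in training_data:
        error = target - predict(inputs)  # feedback: +1, 0, or -1
        # Learning rule: nudge each weight in the direction that reduces error.
        for i, x in enumerate(inputs):
            weights[i] += learning_rate * error * x
        bias += learning_rate * error

print([predict(inputs) for inputs, _ in training_data])  # [0, 0, 0, 1]
```

After training, no table of answers is stored anywhere: what the neuron “knows” lives entirely in `weights` and `bias`.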

Here’s a quick example:

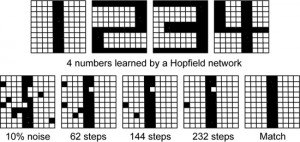

You want to train an ANN to recognize the numbers one through four. You present it with a series of numbers over and over again during training. Each time a number is presented, the network makes a guess. You give the network feedback about its guess (how right or wrong it was), and this feedback is used to adjust the weights of the connections into and out of the hidden layer of neurons.

In this manner, the ANN “learns” to recognize the numbers one through four (or another number or image that the ANN has been trained to recognize).

One important thing to note about ANNs is that you typically have to expose them to thousands of training runs with feedback before the hidden-layer weights settle into values that produce the right answers. Depending on the complexity of the network, simulating this on a computer can take a while.
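The training loop described above can be sketched in plain Python. This is a minimal sketch under several assumptions: the digits are hypothetical 3x5 pixel bitmaps, the network has one hidden layer of six sigmoid neurons, and the feedback is the standard backpropagation error signal rather than a bare right/wrong verdict. The names `w_ij` and `w_jk` mirror the Wij and Wjk connections in the illustration; everything else is illustrative.

```python
import math
import random

random.seed(0)  # fixed seed so the run is repeatable

# Hypothetical 3x5 pixel bitmaps for the digits 1-4 (1 = dark pixel).
DIGITS = {
    1: "010" "110" "010" "010" "111",
    2: "111" "001" "111" "100" "111",
    3: "111" "001" "111" "001" "111",
    4: "101" "101" "111" "001" "001",
}
PATTERNS = [([int(c) for c in bits], d) for d, bits in DIGITS.items()]

N_IN, N_HIDDEN, N_OUT = 15, 6, 4
LEARNING_RATE = 0.5

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

# w_ij: input -> hidden weights; w_jk: hidden -> output weights.
# The last entry in each row is a bias weight whose input is always 1.
w_ij = [[random.uniform(-0.5, 0.5) for _ in range(N_IN + 1)] for _ in range(N_HIDDEN)]
w_jk = [[random.uniform(-0.5, 0.5) for _ in range(N_HIDDEN + 1)] for _ in range(N_OUT)]

def forward(x):
    """Propagate an input through the hidden layer to the output layer."""
    xb = x + [1]
    hidden = [sigmoid(sum(w * v for w, v in zip(row, xb))) for row in w_ij]
    hb = hidden + [1]
    output = [sigmoid(sum(w * v for w, v in zip(row, hb))) for row in w_jk]
    return hidden, output

def train_step(x, digit):
    """One presentation: guess, compare with the right answer, adjust weights."""
    hidden, output = forward(x)
    target = [1.0 if k == digit - 1 else 0.0 for k in range(N_OUT)]
    # Error signal at each output neuron (squared-error loss, sigmoid units).
    delta_out = [(t - o) * o * (1 - o) for t, o in zip(target, output)]
    # Send the error back to each hidden neuron through the w_jk weights.
    delta_hidden = [
        h * (1 - h) * sum(delta_out[k] * w_jk[k][j] for k in range(N_OUT))
        for j, h in enumerate(hidden)
    ]
    hb = hidden + [1]
    for k in range(N_OUT):
        for j in range(N_HIDDEN + 1):
            w_jk[k][j] += LEARNING_RATE * delta_out[k] * hb[j]
    xb = x + [1]
    for j in range(N_HIDDEN):
        for i in range(N_IN + 1):
            w_ij[j][i] += LEARNING_RATE * delta_hidden[j] * xb[i]

def recognize(x):
    """Return the digit whose output neuron is most active."""
    _, output = forward(x)
    return output.index(max(output)) + 1

for epoch in range(2000):  # thousands of presentations with feedback
    for x, digit in PATTERNS:
        train_step(x, digit)

print([recognize(x) for x, _ in PATTERNS])
```

Each pass through the inner loop is one “training run.” The weights start random, so early guesses are meaningless; the network’s answers only become reliable after many presentations have shaped the connections.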

Don’t worry right now if you don’t understand exactly how this works. It’s more important when it comes to politics to think about an associative network of neurons as a better metaphor for the mind than a computer.

Implications

In an artificial neural network model of the human brain, information is stored in the connections.

In this model, rather than data flowing from memory to a CPU for computation, neurons act as both processor and memory. As a neuron processes input during learning, its connections are modified. The network has no separate processing unit and separate memory unit.

Something similar is believed to happen in the human brain, though there is still much about the brain we do not know. One way to conceptualize this is that our connections are shaped as we experience and try different things and then receive feedback.

Our memory is associative rather than accessed by a specific address, as computer memory is. We are able to “request” information from our brain, and our brain is often able to “retrieve” it, just not with the unerring precision of computer memory. Barring equipment failure or memory degeneration, computer memory is virtually infallible: given a specific address, a computer will return the appropriate 1 or 0 every time. Our own memory functions very differently, as shown by how often we struggle to recall a specific piece of information.
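A Hopfield network is one classic model of this kind of associative, content-addressable memory. In the minimal sketch below (hypothetical 8-neuron patterns, the standard Hebbian storage rule, and neuron-by-neuron updates), two patterns are stored in a single set of connection weights, and a corrupted cue is enough to pull the network back to the complete memory. Nothing is looked up by address; retrieval works by similarity.

```python
# Store two patterns of +1/-1 "neurons" in one weight matrix, then recall
# one of them from a corrupted cue. The memories live only in the weights.

PATTERN_A = [1, 1, 1, 1, -1, -1, -1, -1]
PATTERN_B = [1, -1, 1, -1, 1, -1, 1, -1]
N = len(PATTERN_A)

def train(patterns):
    """Hebbian rule: strengthen connections between neurons that agree."""
    w = [[0] * N for _ in range(N)]
    for p in patterns:
        for i in range(N):
            for j in range(N):
                if i != j:  # no self-connections
                    w[i][j] += p[i] * p[j]
    return w

def recall(w, cue, steps=5):
    """Update each neuron in turn from its weighted inputs, several passes."""
    state = list(cue)
    for _ in range(steps):
        for i in range(N):
            total = sum(w[i][j] * state[j] for j in range(N))
            state[i] = 1 if total >= 0 else -1
    return state

weights = train([PATTERN_A, PATTERN_B])
noisy_a = [1, -1, 1, 1, -1, -1, -1, -1]  # PATTERN_A with one neuron flipped
print(recall(weights, noisy_a) == PATTERN_A)  # True
```

Flip too many neurons, or store too many patterns for the network’s size, and recall can settle on the wrong memory or a blend of memories, a loose parallel to the imperfect recall described above.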

The associative connections of our minds, orders of magnitude more complex than those in any ANN, allow us to think and make decisions.

One way to think about these connections and associations is as a frame, a series of connections that we use to reason in a particular instance.

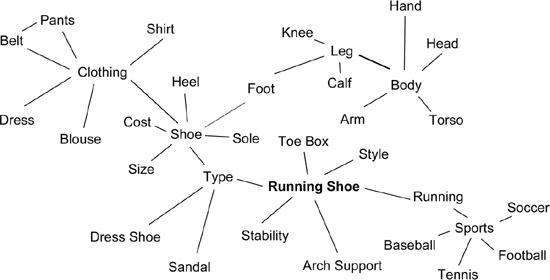

For example, if you want to purchase shoes, you use the series of connections, or frame, associated with a shoe. A shoe is a piece of clothing for human feet. Shoes have soles, arches, heels and may be buckled, laced on, or fastened on with Velcro. Shoes also have various attributes that help you choose a proper shoe: size, color, type, purpose, cost, and so on. You likely learned how to conceptualize shoes from your parents growing up.

This conceptual frame changes and evolves as you learn more about shoes. For instance, when I took up running, I learned about stability in a running shoe after getting shin splints. I asked other runners and went to a specialized running store to find shoes that wouldn’t give me shin splints, and they explained that some running shoes have more stability than others.

Even though they’re all just various forms of foot clothing, I used new experiences and feedback to add to my conceptual frame about shoes.
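The shoe example can be sketched as a toy association network. This illustrates the metaphor only, under loud assumptions: concepts are plain strings, the hypothetical `frame` is a dictionary of weighted links, and each experience simply strengthens the links among the concepts that appear together (a Hebbian-style rule). It is not a claim about how brains actually store concepts.

```python
from collections import defaultdict

# A toy "frame": each concept links to related concepts, and each link has
# a strength. An illustration of the metaphor, not a model of real neurons.
frame = defaultdict(lambda: defaultdict(int))

def experience(*concepts):
    """Strengthen the links between every pair of concepts met together."""
    for a in concepts:
        for b in concepts:
            if a != b:
                frame[a][b] += 1

def associations(concept):
    """Linked concepts, strongest first (ties keep the order they were learned)."""
    links = frame[concept]
    return sorted(links, key=links.get, reverse=True)

# Growing up: a basic frame for shoes.
experience("shoe", "size", "laces")
experience("shoe", "size", "color")
experience("shoe", "cost")
print(associations("shoe"))  # ['size', 'laces', 'color', 'cost']

# Taking up running: shin splints, then advice from other runners.
experience("shoe", "running", "stability")
experience("shoe", "running", "stability")
print(associations("shoe"))
# ['size', 'running', 'stability', 'laces', 'color', 'cost']
```

Nothing in the old frame was deleted; new experience added connections and reweighted what comes to mind first.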

Similarly, as the examples about the mind show, our frames for how we think have evolved over time.

If you believe, for example, that the human mind is a hydraulic system using four basic humors and that an excess of one of these humors causes disease, it might make sense to bleed a person to try to restore a balance of humors.

If, however, you believe the human mind is a computer or a neural network, bleeding someone to try to cure melancholia sounds absurd.

Is this how the mind works?

Not exactly. Remember, we’re talking about metaphors and how we conceptualize our own thinking.

An associative network is a better metaphor for the mind, a better way to think about thinking, than a computer. We still don’t know exactly how the mind works. An artificial neural network is an incomplete model and a terrible duplicate of the human mind.

From what we know to date, ANNs are simply a better metaphor for the mind than computers.

I also use this example to show how behavior often changes only when conceptual frames change.

To quote George Lakoff:

When the facts don’t fit the frame, the frame stays and the facts are ignored.

If you start with networks, you think very differently about politics

Here are a few of the political beliefs I’ve observed that may well have their roots in rationalism or the “mind as a computer” frame:

- “The truth will set you free.” This is the belief that simply exposing someone to the right set of facts will convince them of the error of their ways.

- Voters vote on issues. Plug in an issue that people support, and voters will vote based on this issue.

- Framing is simply choosing the right language; this belief assumes the pre-existence of similar conceptual frames of understanding.

- People should naturally “compute” the same response to the same facts. If they don’t after a single exposure, their “computer mind” must somehow be broken or “stupid.”

- The more facts, the better. What people need for understanding is more facts.

If your conceptual frame for the human mind is a computer, it’s very easy to believe that people should draw similar conclusions from the same set of data or facts. The key in this situation is simply getting people the right facts.

If, however, you conceptualize the mind as a neural network where people make decisions based on conceptual frames and metaphors they’ve learned over the years, you start to see why you might not win people over with facts, especially if they’ve established different conceptual models and are viewing the facts through a different lens.

Lakoff discusses the differences between liberal arts education and business schools:

The biggest reason is reason. As I point out, if you’re a conservative, you go to college, it’s very likely that you’re going to study business and economics at some point. If you do that, in your curriculum you look at marketing–and marketing professors study cognitive science, brain science. They study how people think. So it is common for conservative communications people to use marketing techniques. And that’s all the stuff that is been shown in cognitive science and the brain sciences.

But, if you’re a Democrat and you go to college and are interested in politics, you’re going to study political science and some law, public policy, economics. And in those fields, there is no cognitive science study by the faculty or anybody else. They learn what is called “Enlightenment reason”–that is, Descartes 1650: all thought is supposed to be conscious, when it’s 98 percent unconscious; it’s literal, so there’s no metaphor, therefore, in rational thought, which is ludicrous; that there is no such thing as framing; that statements fit the world or they don’t; that language is neutral, it fits the world, and so on. They learn that you want to use the most popular language. That what makes us people is we’re all rational animals, and therefore we have the same reason, because we’re all human beings. So it follows from that: If you tell people the facts, that will lead them to the right conclusion. And, it doesn’t work.

Don’t get me wrong, facts are critical. Science and facts are at the heart of our best conceptual frames.

For example, it would be difficult if not impossible to conceptualize the mind as a neural network if scientists hadn’t conducted research into mathematical models for human neurons and brain functions.

Conceptual frames, however, go beyond science. They require new language, visualizations, culture, examples, and teachers. To this day, for example, I still remember the power of programming a Hopfield network on a computer, running a training algorithm on it to adjust the internal weights so it would recognize numbers, and then watching as it correctly identified numbers.

I would never have learned about artificial neural networks without college, without a teacher who had studied for years before me, without scientists who had conducted the research, without the people who had written research papers and the textbook, and without doable projects and exercises.

I wasn’t just given a set of facts and left on my own.

I also would never have thought about using this metaphor to rethink my approach to political discussions.

Framing is teaching

A better way to think of framing is as teaching your beliefs.

Unfortunately, we tend to think of framing as “messaging” because of how it was translated into the realm of politics. Politicians think in terms of crafting a message that will appeal to people.

The message will appeal to people if they already understand, and think within, similar frames or ideas about how the world operates.

Again, George Lakoff:

People thought that when I was talking about framing that I was talking about words. This is what Frank Luntz keeps saying, “Words that Work.” The reason he can do that is that on the right, the think tanks figured out the frames before he came along. All he had to do was supply the words for the frames, whereas we have to think out the whole thing. Moreover, the assumption was that there was no difference between framing and spin, which is utterly ridiculous. You do framing every time you talk, every time you think, because frames are what you use in thinking—they’re neural structures.

In other words, the message works when certain frames exist because they’ve been taught and learned. This is why it’s so important to the conservative movement to teach their morality, their frames.

It’s also why the conservative movement is against “liberal” education, meaning any education that doesn’t teach conservative morality frames, especially about the economy.

We can see how the Chamber of Commerce understood framing when they followed all the recommendations of the Powell memo. The U.S. Chamber of Commerce:

- Developed frames about the economy that showed government as the villain and businessmen as heroes.

- Broadcast these frames through as many media outlets as possible: radio, TV, print, and scholarly journals.

- Established schools of business on campuses across America.

- Defunded social sciences and schools of humanities.

- Demonized any teachings in the media that didn’t agree with these frames as “socialist” or “liberal.”

- Created powerful lobbying arms to elect politicians that hold the views of the U.S. Chamber of Commerce.

In a sense, they created a social movement that taught people that businesses were inherently good and democracy was inherently bad. They taught that anything private business did was morally “right” and anything people and/or workers did was morally “wrong.” They taught that markets should rule over democracy. The role of owners is to “produce good”; the role of everyone else is to leave these owners alone.

The U.S. Chamber understands that framing is teaching.

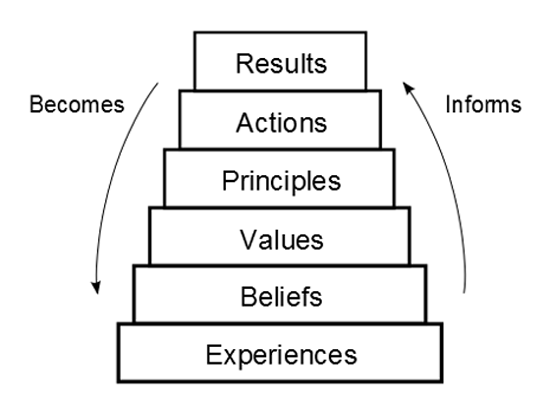

A good way to visualize this is that if we want different results, we need to teach better beliefs and help people have better experiences. And we shouldn’t shy away from “beliefs” even though we tend to associate this word with religion. Beliefs are simply things that we hold true about the world and how it works.

In The Culture Game, Dan Mezick describes this relationship with a results pyramid that shows how experiences shape our beliefs, values, and principles which subsequently inform and shape our actions and results. The pyramid is constantly being “shaped” in a feedback loop as results become experiences and we re-evaluate our beliefs and values.

It used to be that when I told this story to people they would say, “Yeah, yeah. I get it.” Soon after, they would tell me that they were working on developing a better “message.” This speaks to the power of the Enlightenment frame. When science doesn’t agree with our “frame” for framing, we discard the science in favor of our Enlightenment beliefs.

I did this myself for a long time. George Lakoff didn’t understand this was going on either.

While we focused on coming up with better “messaging,” corporate think tanks focused on teaching, on a media strategy for conveying their beliefs and values, and on going after any and all institutions that teach democracy, the social sciences, the arts, or anything that isn’t laissez-faire capitalism and dog-eat-dog individualism. This is why the fight against liberal arts colleges, NPR, and public schools is so important to them.

Like Lakoff said: “When the facts don’t fit the frame, the frame stays and the facts are ignored.”

If you want people to better understand framing, for example, talk about how we need to teach people better ideas and values. Talk about how we need better stories about how the world can and should work. Talk about the need to have discussions at a beliefs level (not a policy level).

Reframe framing as teaching what we believe and why we believe it.

(Excerpted from The Little Book of Revolution.)

—


David Akadjian is the author of The Little Book of Revolution: A Distributive Strategy for Democracy. Follow @akadjian